Explaining the capabilities of MeTTa, an AI programming language

When people communicate with each other, they use language: a system of signs and symbols used to transmit meaning or information.

Computer programming languages serve essentially the same purpose: they provide a vocabulary, a set of grammatical rules, and symbols we can use to communicate with a computer or other computing device and instruct it to perform specific tasks.

However, there is one fundamental difference between the two. Computers need extremely specific instructions to perform tasks. Therefore, programming languages are generally closed, fixed and strict to avoid confusion and mistakes.

While this strictness has allowed developers to create amazing, revolutionary programs and applications, it has also imposed a rigid, linear model of computer logic that makes it hard for computers to reason and draw inferences.

These limitations have become ever more significant with current advancements in artificial intelligence.

Although developers have built impressive narrow AI applications with these languages, AGI will require a broader solution, one that enables machines to perform cognitive functions the way the human brain does.

That is where MeTTa comes in.

Introducing MeTTa, OpenCog and the Atomspace

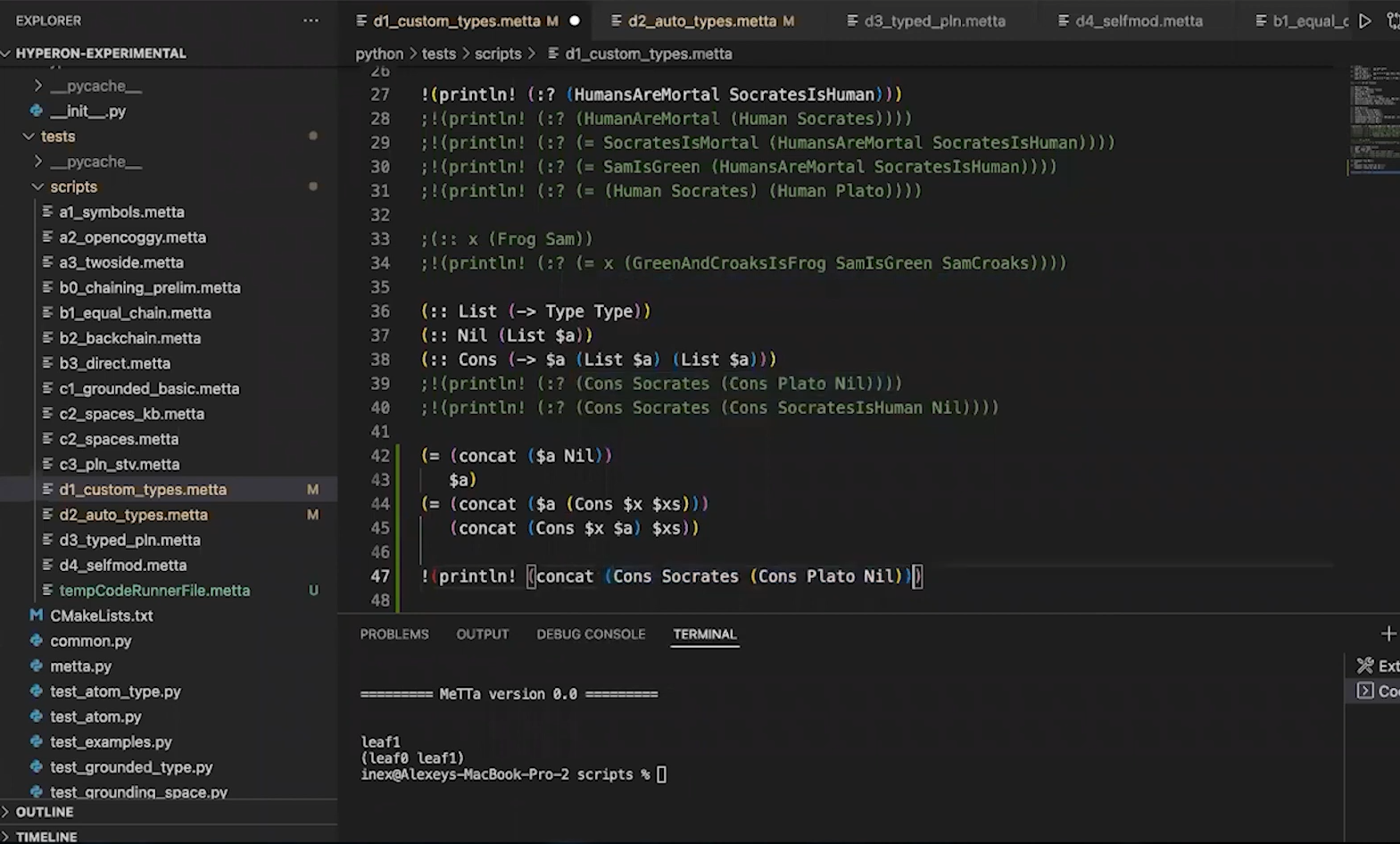

MeTTa (Meta Type Talk) is a novel programming language designed as a meta-language with very basic and general facilities for handling symbols, groundings, variables, types, substitutions and pattern matching.

To explain the capabilities and potential of this language, it’s important to understand the environment it operates in: OpenCog Hyperon.

Understanding OpenCog Hyperon, the Atomspace, and MeTTa

OpenCog Hyperon is an advanced software development framework that aims to support the creation of systems with artificial general intelligence (AGI) at or beyond human-level capabilities.

OpenCog’s core design feature is the Atomspace, a distributed metagraph consisting of nodes and links labeled with various types of information.
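
What makes a metagraph different from an ordinary graph is that a link can point not only at nodes but also at other links. The toy Python sketch below illustrates only that idea; the `Node`, `Link`, and `ToyMetagraph` classes and the labels “Believes” and “Alice” are hypothetical and are not Hyperon’s actual Atomspace API.

```python
from dataclasses import dataclass

# Toy metagraph: hypothetical classes for illustration, not Hyperon's API.

@dataclass(frozen=True)
class Node:
    label: str  # the kind of information the node carries
    name: str

@dataclass(frozen=True)
class Link:
    label: str
    targets: tuple  # may hold Nodes *or other Links*: the "meta" in metagraph

class ToyMetagraph:
    def __init__(self):
        self.atoms = set()

    def add(self, atom):
        """Add an atom; adding a link also adds everything it points to."""
        if isinstance(atom, Link):
            for target in atom.targets:
                self.add(target)
        self.atoms.add(atom)
        return atom

    def incoming(self, atom):
        """Return every link that points directly at `atom`."""
        return [a for a in self.atoms if isinstance(a, Link) and atom in a.targets]

g = ToyMetagraph()
sam = Node("Concept", "Sam")
balloon = Node("Concept", "balloon")
owns = g.add(Link("Possesses", (sam, balloon)))
# A link whose target is another link: a statement about a statement.
belief = g.add(Link("Believes", (Node("Concept", "Alice"), owns)))
```

Here `g.incoming(owns)` returns the `belief` link, showing that one statement can be the subject of another.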

This structure is designed to let AI algorithms carry out critical operations using fewer resources, reducing both execution time and memory usage.

Multiple AI algorithms originating from different paradigms (such as logical reasoning, probabilistic programming, attractor neural networks, and evolutionary learning) are then implemented on top of the Atomspace, which they use for internal representation and cross-algorithm communication.

In short, OpenCog Hyperon aims to achieve greater scalability and usability for AGI development, and to leverage tools and methods from different branches of math in the system design.


MeTTa, a Critical Part of OpenCog Hyperon

MeTTa serves both as the language used internally by Hyperon algorithms and as the end-user language developers use to code algorithms and applications built with Hyperon.

The goal for MeTTa is not only to manipulate knowledge metagraphs, but also to be represented within them: MeTTa programs are themselves stored in the knowledge metagraph, enabling the metagraph to flexibly transform itself in cognitively useful and meaningful ways.
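
The idea of a program that lives inside the data it transforms can be illustrated with a small sketch. In the Python toy below, a rewrite rule is stored as an ordinary expression in the same space as the facts, and a `step` function reads the rules out of the space and applies them back to it. The tuple encoding, the `"rule"` marker, and the `parent`/`child` relations are assumptions made for illustration; this is not how MeTTa or Hyperon implement rewriting.

```python
# Toy sketch: facts and rules share one space, so the "program" (the rules)
# is itself data. Hypothetical encoding; not the MeTTa/Hyperon implementation.

def match(pattern, expr, bindings):
    """Match a flat pattern such as ('parent', '$x', '$y') against expr."""
    if len(pattern) != len(expr):
        return None
    b = dict(bindings)
    for p, e in zip(pattern, expr):
        if isinstance(p, str) and p.startswith("$"):  # a variable
            if p in b and b[p] != e:
                return None
            b[p] = e
        elif p != e:
            return None
    return b

def substitute(template, bindings):
    """Replace variables in a flat template with their bound values."""
    return tuple(bindings.get(t, t) for t in template)

space = {
    ("parent", "Tom", "Sam"),
    # A rule stored as an ordinary expression in the same space.
    ("rule", ("parent", "$x", "$y"), ("child", "$y", "$x")),
}

def step(space):
    """Apply every rule found in the space to every expression in the space."""
    rules = [e for e in space if e[0] == "rule"]
    new = set()
    for _, pattern, template in rules:
        for expr in space:
            b = match(pattern, expr, {})
            if b is not None:
                new.add(substitute(template, b))
    return space | new
```

After one call, `step(space)` also contains `("child", "Sam", "Tom")`. Because rules are just expressions, a rule could in principle produce new rules too, which is the kind of self-transformation described above.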

Indeed, MeTTa is considered a viable “language of thought” for AGI because it allows for representation of various types of knowledge and cognitive processes. With MeTTa, knowledge can be transformed in a meaningful way within knowledge metagraphs, which is an important aspect of the overall OpenCog and Hyperon programs.

In other words, this programming language is specifically designed to enable computers to reason and infer knowledge in a way that resembles human thinking.

Now, let’s dive deeper into how exactly MeTTa makes this possible.

An Overview of MeTTa’s Elements: Symbols, Types, and Expressions

MeTTa’s syntax differs from that of most programming languages: programs are written as parenthesized expressions, similar to Lisp’s S-expressions, a form intended to let computers navigate knowledge graphs much as a human mind would.

The basic atomic elements of the MeTTa language are called symbols. Symbols can be grounded, meaning that they refer to external pieces of data, which can include executable code.
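
To make the distinction between plain symbols and grounded ones concrete, here is a minimal Python sketch. The `Symbol`, `Grounded`, and `Expression` classes and the `evaluate` function are hypothetical stand-ins: a grounded atom simply wraps an external Python value, and when that value is a callable, an expression headed by it can be executed.

```python
import operator
from dataclasses import dataclass
from typing import Any

# Hypothetical classes sketching the kinds of atoms described above.

@dataclass(frozen=True)
class Symbol:
    name: str  # a plain symbol: meaning comes only from how it is used

@dataclass(frozen=True)
class Grounded:
    value: Any  # external data; may be a Python callable (executable code)

@dataclass(frozen=True)
class Expression:
    children: tuple

def evaluate(atom):
    """Run an expression headed by a grounded callable; otherwise return it unchanged."""
    if isinstance(atom, Expression) and atom.children:
        head, *args = atom.children
        if isinstance(head, Grounded) and callable(head.value):
            values = [evaluate(a) for a in args]
            if all(isinstance(v, Grounded) for v in values):
                return Grounded(head.value(*(v.value for v in values)))
    return atom

plus = Grounded(operator.add)
arithmetic = Expression((plus, Grounded(2), Grounded(3)))
symbolic = Expression((Symbol("possesses"), Symbol("Sam"), Symbol("balloon")))
```

Evaluating `arithmetic` runs the wrapped Python function and yields `Grounded(5)`, while `symbolic` is purely symbolic and comes back unchanged.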

Symbols are composed into expressions, which can be represented as trees. Any expression is a valid term by default, unless constraints are imposed by types. Here are some examples of expressions:

(possesses Sam balloon)

(has-color balloon blue)

(likes Sam blue)
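
Because these expressions are just nested parentheses, turning their text into trees takes only a few lines. The Python sketch below is a generic S-expression reader written for illustration, not MeTTa’s actual parser; each expression becomes a nested tuple whose first element is the head. The nested example `(believes Alice (likes Sam blue))` is hypothetical.

```python
def tokenize(text):
    """Split text into '(' , ')' and bare symbol tokens."""
    return text.replace("(", " ( ").replace(")", " ) ").split()

def parse(tokens):
    """Consume one expression from the front of tokens and return it as a tree."""
    token = tokens.pop(0)
    if token == "(":
        children = []
        while tokens[0] != ")":
            children.append(parse(tokens))  # sub-expressions become subtrees
        tokens.pop(0)  # drop the closing ")"
        return tuple(children)
    return token  # a bare symbol is a leaf

def parse_all(text):
    """Parse every top-level expression in a program."""
    tokens = tokenize(text)
    exprs = []
    while tokens:
        exprs.append(parse(tokens))
    return exprs

program = parse_all("(possesses Sam balloon) (has-color balloon blue) (likes Sam blue)")
nested = parse_all("(believes Alice (likes Sam blue))")[0]
```

`nested` comes out as `('believes', 'Alice', ('likes', 'Sam', 'blue'))`: the inner expression is a subtree of the outer one. This toy reader does no error handling, so unbalanced parentheses raise an `IndexError`.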

When expressions are added to an Atomspace, together they constitute a program. Because the Atomspace supports a special form of pattern matching, it can retrieve the expressions that match a given query expression. MeTTa can therefore use one or more Atomspaces both to store its programs and to retrieve expressions through pattern matching.
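
A query expression is an expression with variables in it, written in MeTTa with a `$` prefix. For example, `!(match &self (has-color balloon $c) $c)` asks which color the balloon has. The Python sketch below imitates that kind of retrieval over the example expressions with a small recursive matcher. It is an illustrative assumption, not the Atomspace’s real matching algorithm, which is considerably more general.

```python
def is_var(x):
    """Variables are strings starting with '$', as in MeTTa."""
    return isinstance(x, str) and x.startswith("$")

def match(pattern, expr, bindings):
    """Return extended bindings if pattern matches expr, else None."""
    if is_var(pattern):
        if pattern in bindings:  # already bound: must agree
            return bindings if bindings[pattern] == expr else None
        return {**bindings, pattern: expr}
    if isinstance(pattern, tuple) and isinstance(expr, tuple):
        if len(pattern) != len(expr):
            return None
        for p, e in zip(pattern, expr):  # match subtrees left to right
            bindings = match(p, e, bindings)
            if bindings is None:
                return None
        return bindings
    return bindings if pattern == expr else None

def query(space, pattern):
    """Yield one bindings dict per stored expression that matches pattern."""
    for expr in space:
        b = match(pattern, expr, {})
        if b is not None:
            yield b

space = [
    ("possesses", "Sam", "balloon"),
    ("has-color", "balloon", "blue"),
    ("likes", "Sam", "blue"),
]
```

`list(query(space, ("has-color", "balloon", "$c")))` returns `[{'$c': 'blue'}]`, and a pattern such as `("$rel", "Sam", "$y")` retrieves every statement about Sam.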

Finally, symbols are gradually typed: type annotations are optional, and where types are present, they impose restrictions on which expressions are accepted as valid terms.

The built-in symbol “:” (colon) is used in MeTTa to write expressions that declare the type of a symbol. Consider the following expression:

(: Sam Human)

This declaration allows the symbol “Sam” to appear anywhere in the Atomspace where an expression expects a symbol of type “Human”. As a result, whenever a query refers to the “Human” type, the system can infer that Sam is relevant to it, and thus produce a more representative response.
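
The sketch below shows how `(: Sam Human)`-style declarations can constrain which expressions count as valid terms, with gradual typing meaning that untyped symbols are let through. MeTTa expresses function signatures with arrow types built from `->`. The `signatures` dictionary here is only a hypothetical stand-in for that, and the `Object` type and the signature for `possesses` are assumptions made for the example.

```python
# Type declarations written as (: symbol Type) expressions.
declarations = [
    (":", "Sam", "Human"),
    (":", "balloon", "Object"),  # hypothetical type for the example
]
# Hypothetical signature: `possesses` expects (Human, Object) arguments.
signatures = {"possesses": ("Human", "Object")}

def type_of(symbol):
    """Look up a symbol's declared type; None means it is untyped."""
    for _, name, typ in declarations:
        if name == symbol:
            return typ
    return None

def well_typed(expr):
    """Accept expr unless a declared type contradicts its head's signature."""
    head, *args = expr
    expected = signatures.get(head)
    if expected is None:
        return True  # no signature, so no constraint
    if len(expected) != len(args):
        return False
    # Gradual typing: an untyped argument (None) is accepted anywhere.
    return all(type_of(a) in (None, t) for a, t in zip(args, expected))

def symbols_of_type(typ):
    """Everything declared with the given type, e.g. every Human."""
    return [name for _, name, t in declarations if t == typ]
```

With these declarations `(possesses Sam balloon)` is accepted, `(possesses balloon Sam)` is rejected because `balloon` is not a `Human`, and `symbols_of_type("Human")` finds Sam.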

Now, let’s combine all these elements into a single set of expressions:

(: Sam Human)

(possesses Sam balloon)

(has-color balloon blue)

(likes Sam blue)

From these expressions, a computer could learn from experience and generalize, inferring that humans who own blue objects are likely to prefer the color blue.
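
One simple way to think about such a generalization is as a candidate rule that is checked against the stored expressions. The Python sketch below counts how many cases match the rule’s premise (a human who possesses something of some color) and how many of those also satisfy its conclusion. It is a hypothetical illustration, not how Hyperon learns. Ann, the kite, and the color red are invented data points, and a handful of confirming cases is only weak evidence.

```python
def is_var(x):
    return isinstance(x, str) and x.startswith("$")

def match(pattern, expr, bindings):
    """Match a flat pattern against a flat expression, extending bindings."""
    if len(pattern) != len(expr):
        return None
    b = dict(bindings)
    for p, e in zip(pattern, expr):
        if is_var(p):
            if b.get(p, e) != e:  # bound to something else: no match
                return None
            b[p] = e
        elif p != e:
            return None
    return b

def substitute(template, bindings):
    return tuple(bindings.get(t, t) for t in template)

def conjunctive_query(space, patterns, bindings=None):
    """Yield bindings satisfying every pattern (a simple backtracking join)."""
    bindings = {} if bindings is None else bindings
    if not patterns:
        yield bindings
        return
    first, rest = patterns[0], patterns[1:]
    for expr in space:
        b = match(first, expr, bindings)
        if b is not None:
            yield from conjunctive_query(space, rest, b)

facts = [
    (":", "Sam", "Human"),
    ("possesses", "Sam", "balloon"),
    ("has-color", "balloon", "blue"),
    ("likes", "Sam", "blue"),
    (":", "Ann", "Human"),             # invented data point
    ("possesses", "Ann", "kite"),      # invented data point
    ("has-color", "kite", "red"),      # invented data point
]
# Candidate generalization (an assumption): humans like the colors of what they own.
premise = [(":", "$p", "Human"), ("possesses", "$p", "$o"), ("has-color", "$o", "$c")]
conclusion = ("likes", "$p", "$c")

def support(facts, premise, conclusion):
    """Return (confirmed cases, total premise cases) for the candidate rule."""
    cases = list(conjunctive_query(facts, premise))
    confirmed = [b for b in cases if substitute(conclusion, b) in facts]
    return len(confirmed), len(cases)
```

Here `support(facts, premise, conclusion)` gives `(1, 2)`: Sam’s case confirms the rule, while nothing is known about whether Ann likes red.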

For example, suppose Sam was planning to buy a car and we wanted to know which color he would pick, without providing further context. A narrow AI language model might produce a response similar to this:

“I apologize, but I cannot predict Sam’s car color preference. However, according to a recent study conducted by Axalta Coating Systems, the most popular car colors in 2020 were white, black, and gray, accounting for over 75% of new car sales globally. Other popular car colors included silver, red, and blue. Ultimately, the decision on what car color to choose is highly subjective and varies from person to person.”

By contrast, a model built with MeTTa and the OpenCog Hyperon framework could apply what it learned about Sam and guess that he will buy a blue car, even if the data it has been trained on contains no information about his car preferences.
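
The car example can be sketched the same way. The Python toy below deliberately leaves out the explicit `(likes Sam blue)` fact and contains nothing at all about cars. It applies an assumed rule (a human likes the colors of the things they possess) to derive a preference, and then uses that preference as its guess. The rule and the function names are hypothetical and exist only for illustration.

```python
facts = {
    (":", "Sam", "Human"),
    ("possesses", "Sam", "balloon"),
    ("has-color", "balloon", "blue"),
}

def inferred_likes(facts):
    """Toy rule (assumption): a human likes the colors of the things they possess."""
    humans = {x for rel, x, t in facts if rel == ":" and t == "Human"}
    owned = {(p, o) for rel, p, o in facts if rel == "possesses"}
    colors = {(o, c) for rel, o, c in facts if rel == "has-color"}
    return {("likes", p, c)
            for p, o in owned if p in humans
            for o2, c in colors if o2 == o}

def guess_purchase_color(facts, person):
    """Guess colors for a new purchase; note that `facts` says nothing about cars."""
    known = facts | inferred_likes(facts)
    return sorted(c for rel, p, c in known if rel == "likes" and p == person)
```

`guess_purchase_color(facts, "Sam")` returns `['blue']`, while a person the system knows nothing about gets an empty list rather than a made-up answer.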

Capabilities of the MeTTa Programming Language

MeTTa’s innovative syntax and architecture, along with its integration with the OpenCog Hyperon framework, allow us to build computers that can navigate data stored in knowledge graphs using logic, inference, and reasoning.

Whereas LLMs such as ChatGPT produce responses based on probability and statistical pattern finding, MeTTa-powered models aim to simulate “thinking” processes, learn from experience, and apply logic and reasoning as a human would, producing quality representations of knowledge and accurate responses to users’ queries.

In this article, we’ve outlined only the most basic aspects of MeTTa, but its capabilities and potential for artificial general intelligence go far beyond what we have covered here.

It’s by exploiting this potential that TrueAGI aims to build tailored solutions for enterprises to integrate artificial general intelligence into their business processes, unlocking a new era of efficiency, productivity, and automation.
