The future of intelligence starts here

trueAGI is creating the world's first artificial human-level intelligence.

We are on the threshold of a new kind of technology: one that truly understands, adapts, and evolves. One that learns and grows over time. One built not just to power innovation, but to help solve the world’s most complex and urgent challenges. One that is grounded in the Pillars of Humanity's BEST Interests: Benevolence, Ethics, Safety, and Trust.

We're taking a revolutionary approach

trueAGI solutions are the building blocks of near-future technology beyond anything humanity has ever seen. Today's mainstream generative AI leaves trillions of dollars of value – and humanity's toughest challenges – locked behind its limitations. In contrast, full Artificial General Intelligence will:

Reason and learn from experience

Make major cognitive leaps

Adaptively work with nuance and complexity

Exhibit strategic thinking and decision-making

Infer beyond explicit training data

Intelligently manage and coordinate other AIs

Unique capabilities with powerful benefits

Right now, trueAGI gives enterprise teams, and the populations they serve, AGI-based components capable of delivering real-world value.

Each tailored trueAGI solution:

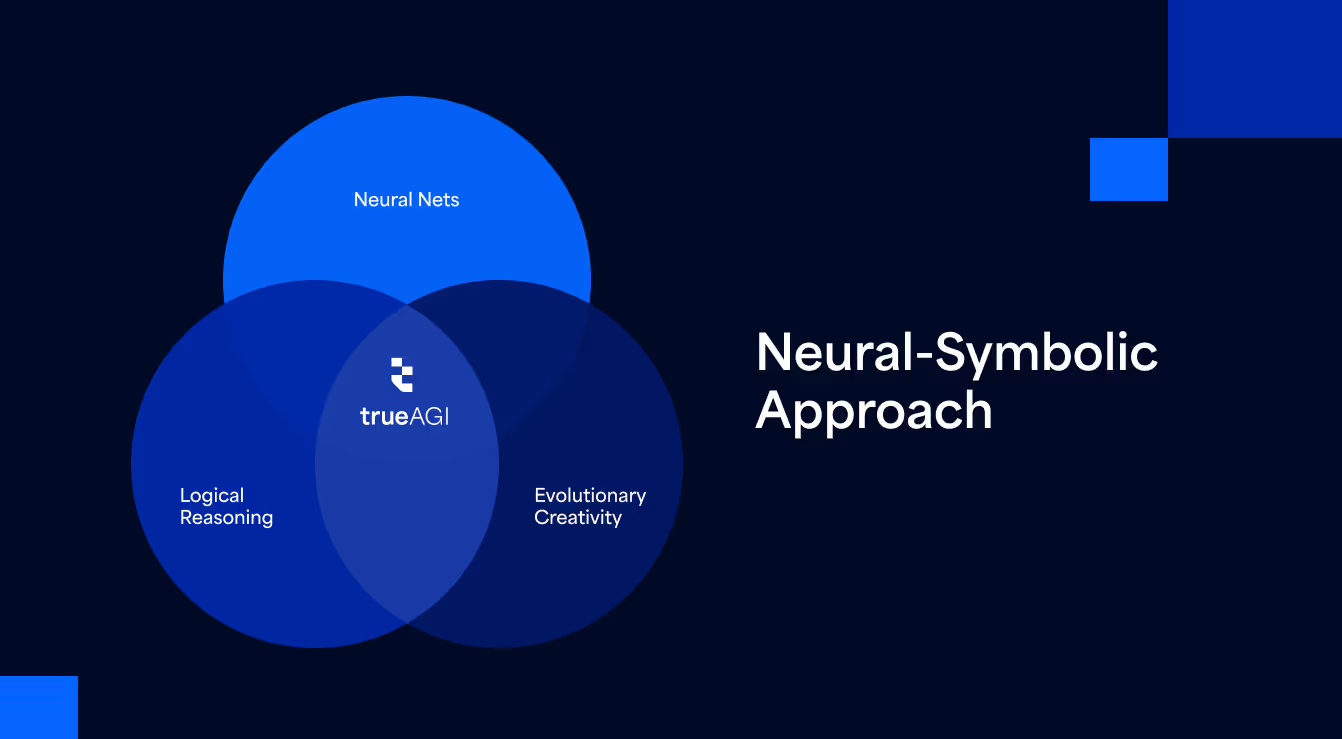

implements trueAGI’s neuro-symbolic cognitive architecture for adaptability, security, and cost-effectiveness without sacrificing speed or usability

integrates seamlessly with enterprise workflows, generalizes and applies knowledge across domains, and scales and evolves with the needs of the enterprise

can be deployed in the cloud, on-premises, or in a hybrid configuration to meet the security, compliance, and flexibility requirements of any enterprise

improves strategic thinking and decision-making by inferring beyond training data and distinguishing fact from fiction

significantly reduces energy, hardware, and data requirements

Advanced solutions for complex enterprises

trueAGI’s solutions offer businesses the unprecedented ability to analyze, adapt, and profit in an increasingly complex reality. We are developing game-changing MVP solutions now that lay the groundwork for transforming creativity, planning, research, and decision-making across industries tomorrow: